Operations Are Being Throttled on Elb Will Try Again Later

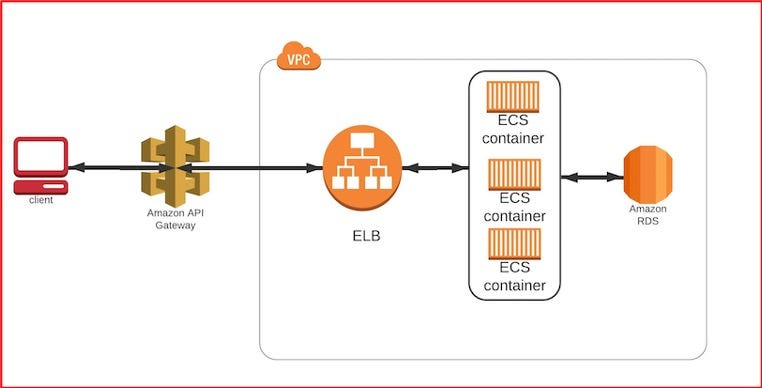

Deploy Microservices Using AWS ECS Fargate and API Gateway

Why ECS Fargate?

- No need to manage and provision servers

a. ECS will always keep your containers up to date with the required security and OS patches.

b. ECS will automatically scale the infrastructure up and down as per usage.

- Resource efficiency and cost saving

a. You specify the resources each service needs to run. This way resources are never over- or under-provisioned for the service.

b. This comes with a good discount too. Check the AWS documentation for pricing.

- Security by design

ECS tasks run in their own dedicated environment and do not share CPU, memory, storage, or network resources with other tasks. This way traffic can be restricted to each task, which creates workload isolation.

- No need to worry about the underlying host

Many services can be run as required without worrying about the underlying host capacity. This is the biggest advantage of Fargate over non-Fargate containers, as there is no need to limit services based on the underlying host capacity.

- Rich monitoring

ECS Fargate has default integration with AWS CloudWatch Container Insights. Using this you can get logs and set up monitoring as per your needs.

- Better performance and easy deployment

a. Containers can be started and stopped in seconds as needed.

b. As ECS uses Docker underneath, services can be deployed to ECS easily without changing the code.

What will we achieve in this article?

- We will deploy services in ECS Fargate containers.

- We will expose the services using AWS API Gateway.

- We will deploy a DB in an RDS instance.

- We will set up monitoring on the ECS containers to notify of any failures or issues.

What should you already know?

- Basic understanding of Docker, and a Docker environment set up on your laptop.

- Understanding of microservices and how to build Docker images from them.

- Basic understanding of AWS RDS and how to create a DB schema.

- You should have an AWS account and a basic understanding of networking and security in AWS.

- Basic understanding of load balancing and API Gateway.

Prerequisites?

- You should have your own VPC with a minimum of 2 public and 2 private subnets, and a bastion host to access your internal DB.

- You should have 2 sets of microservices (example: one for students and one for subjects), and each set should be packaged in a Docker image.

- Your microservices can communicate internally with each other (this will be explained in the inter-service communication section).

How will we achieve this?

- Create a MySQL RDS instance.

- Create an ECS Fargate cluster.

- Create an AWS ECR repository.

- Upload the Docker images to AWS ECR.

- Create an Application Load Balancer.

- Deploy images in ECS Fargate as containers.

- Set up inter-service communication with the ALB.

- Expose API endpoints via API Gateway for private endpoints.

- Set up CloudWatch alarms for monitoring.

Create MYSQL RDS instance

- Log in to the AWS Console.

- Choose your preferred region, like us-west-2.

- Go to the RDS service and create a MySQL database (non-public, within your VPC, in private subnets).

- Create the schema inside the database server and configure it as per your services' requirements.

Create ECS Fargate cluster

A "Fargate cluster" needs to be created first, under which services can be deployed inside containers.

- Log in to the AWS Console.

- Choose your preferred region, like us-west-2.

- Go to the ECS service page.

- Click on the "Create Cluster" button.

- Select "Networking only" and click "Next".

- Provide a name like "ecs-fargate-cluster-demo".

- Don't select "Create VPC", as we will be using an existing VPC.

- Select the "CloudWatch Container Insights" check box and click create.

The ECS Fargate cluster is created. Isn't it easy? You can take a look at your cluster. We have not deployed anything there yet, so you will see an empty cluster.

Create AWS ECR Repository

An ECR repository is a private Docker registry. You can upload your Docker images to an ECR repository (preferably), but you can use your Docker Hub repo too. This service is highly secure and highly available. For more information, check the AWS documentation.

- On the ECS landing page, you will find a link to the ECR repositories in the left menu, or you can go to the ECR console directly.

- Click on create repository and provide the name "demo1" for the repository (for example, for the Students Docker image).

- Click on the "create repository" button.

- Create another repository with the name "demo2" (for example, for the Subjects Docker image).

Upload your docker images to AWS ECR

Time to upload the Docker images to the ECR repositories created above.

We have created two ECR repositories for two Docker images to showcase "inter-service communication".

You may have services that need to communicate with each other before they respond to the client.

Make sure you have the AWS CLI set up on your laptop.

- Get the push commands to upload these images to your ECR repo.

a. Go to your ECR repository console.

b. Select the repository and click on "View push commands".

c. It will open a popup and show all the commands you need to push the images to ECR.

d. Push the demo1 image to the demo1 repository and the demo2 image to the demo2 repository.
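The push commands shown in the console tag each local image with the repository's URI before pushing it. As a rough sketch of how that URI is put together (the account ID, region and `latest` tag below are placeholders, not values from this article):

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build the image URI that `docker tag` and `docker push` target for an ECR repository."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


# Placeholder account ID and region; substitute your own values.
for repo in ("demo1", "demo2"):
    print(ecr_image_uri("123456789012", "us-west-2", repo))
```

The same URI is what you will later paste into the "Image" field of the container definition.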

Create Application Load Balancer

There are multiple ways to set up inter-service communication, where one service communicates with another. In a microservice architecture, one service doesn't know the location of the other services and can't connect automatically, as each service is located in its own container and has a different private IP address. In the world of containers, a container's IP address can't be relied on, as it can change if the container is created again.

There are various ways to handle this, but an Application Load Balancer is efficient and simple to use. Each service first registers itself with the Application Load Balancer, and then a service can call the Application Load Balancer along with a service path to communicate with another service.

Like: http://<application load balancer DNS name>/student

Where "/student" is the path of the other service, registered in the Application Load Balancer.

When you have multiple containers of each service, the Application Load Balancer spreads the traffic across them (round robin by default), and this way it makes sure the load is balanced between the containers running the same service.
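From the calling service's point of view, the other service is just a path on the load balancer. A minimal sketch of building such a URL, assuming a hypothetical helper `service_url` and a made-up ALB DNS name:

```python
def service_url(alb_dns_name: str, service_path: str, resource: str = "") -> str:
    """Join the ALB DNS name, the service path and an optional resource into one URL."""
    parts = [p.strip("/") for p in (service_path, resource) if p]
    return f"http://{alb_dns_name}/" + "/".join(parts)


# Hypothetical internal ALB DNS name, used only for illustration.
print(service_url("internal-demo-alb.us-west-2.elb.amazonaws.com", "/student", "42"))
```

Keeping the ALB DNS name in configuration (for example an environment variable) rather than in code means the services never need to know each other's container IPs.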

Let's create one Application Load Balancer now.

- Log in to the AWS console.

- Go to the EC2 console and select the region where you created your VPC above.

- Click on "Load Balancers" from the left menu.

- Click on the "Create Load Balancer" button.

- Click "Create" for "Application Load Balancer".

- Fill in the form with the information below:

a. Scheme: Internal (This is because we will be exposing all services using API Gateway, not directly via the load balancer.)

b. IP address type: ipv4

c. Load Balancer Protocol: HTTP, port 80

d. VPC: Select your created VPC.

e. Availability Zones: Select all available zones and select "public subnets" only.

- Click on the "Next: Configure Security Settings" button.

a. As we are using listener port 80 and not 443 (with SSL), we will not get the option to set up security configurations. In production you should always use port 443 with SSL and configure these settings.

- Click on the "Configure Security Groups" button.

a. Assign a security group: "Create a new security group"

b. Security group name: Give any name, like "ecs-fargatel-elb-grp".

- Click on the "Configure Routing" button.

Let's create one "Target Group" now for one service; we will create and attach another one later.

a. Name: ecs-fargate-demo1-tg

b. Target type: IP

c. Keep all settings default except the path for "Health Check".

Path = /health-check

- Click on the "Next: Register Targets" button (as we have not deployed any service yet, we don't have any targets; we will set this up later).

- Click on "Next: Review" and hit "Create".

This will create the Application Load Balancer after some time, along with one target group, "ecs-fargate-demo1-tg".

We have set the health check path to "/health-check". The target group keeps hitting this path at the regular intervals defined in its health check settings to get the health of the service. We should create a "/health-check" path in each service just for this purpose. The target group should get 200 as the response code when it hits this endpoint for any service deployed in containers.
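What the "/health-check" path looks like depends on the framework your services use. Purely as an illustration, here is a minimal sketch using only the Python standard library; the `route` and `serve` names are made up for this example, and your real services would add this path to their existing router instead:

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def route(path: str) -> Tuple[int, bytes]:
    """Return (status code, body) for a request path; only the health check is handled here."""
    if path == "/health-check":
        return 200, b"OK"
    return 404, b"Not Found"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = route(self.path)
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 80) -> None:
    """Start the server on the container port used in this article (blocks forever)."""
    HTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

Calling `serve()` inside the container would answer the target group's probes with 200 on "/health-check" and 404 elsewhere.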

Go to the "Target Group" page and create another target group with the name "ecs-fargate-demo2-tg", with the same configuration, for the other service.

Deploy images in ECS Fargate as containers and setup inter service communication

We need to deploy the pushed images to create containers now.

Let's understand and create the three IAM roles below before we create any container.

- Task Role: This role gives your services deployed in ECS containers access to other AWS services. The "AmazonECS_FullAccess" policy is required for this role to work.

- Task execution role: This role is required by tasks to pull container images and publish container logs to Amazon CloudWatch on your behalf. The "AmazonECSTaskExecutionRolePolicy" policy is required for this role to work.

- ECS Auto Scale Role: This role is used to let ECS auto-scale ECS tasks based on load. The "AmazonEC2ContainerServiceAutoscaleRole" policy is required for this role to work.

Now, let's understand some of the popular terms used in ECS.

- Task Definition: This defines the configuration of the container that holds your service, such as memory, CPU, and which image should be used.

- Task: A runtime instance of a "Task Definition".

- Service: This defines the way your "Task" will behave in the ECS cluster. You can configure auto scaling and set up inter-service communication using the Application Load Balancer. You can also define the number of tasks for each "Task Definition" and their deployment strategy for handling load and availability. It's a kind of orchestration engine for your containers.

You can directly deploy "Task Definitions" and create a Task out of them. But you will not be able to take advantage of what a Service offers: you would need to set up inter-service communication manually, and you can't use auto scaling.

Let's deploy the images we have pushed to ECR.

Create Task Definitions

- Log in to the AWS Console.

- Go to the ECS console and select the region where you created your VPC above.

- Click on "Task Definitions" from the left menu.

- Click on the "Create new Task Definition" button.

- Select "Fargate" as the launch type.

- Click on "Next Step" and fill in the form with the data below.

a. Task Definition Name: ecs-fargate-demo1-td

b. Task Role: The task role we created above.

c. Network Mode: awsvpc

d. Task execution role: The task execution role we created above.

e. Task memory (GB): 0.5 GB (This is the maximum memory your task can consume).

f. Task CPU (vCPU): 0.25 vCPU (This is the maximum CPU your task can consume).

- Click on "Add Container".

a. Container name: "ecs-fargate-demo-1-container"

b. Image: ECR repository URI. You can get the URI from the ECR repository you created above.

c. Memory Limits (MiB): Soft limit: 100 MiB

d. Port mappings: 80

e. Leave all other settings as default and hit "Add".

- Click "Create".

This will create the task definition for one image. Create a similar task definition for the other pushed image.
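The console form above ends up as a JSON task definition. As a sketch, the same settings can be written as a Python dictionary in the shape that the ECS `RegisterTaskDefinition` API accepts; the ARNs and image URI below are placeholders, and the function name is made up for this example:

```python
import json


def fargate_task_definition(family, container_name, image_uri, task_role_arn, execution_role_arn):
    """Mirror the console form: 0.25 vCPU, 0.5 GB, awsvpc networking, container port 80."""
    return {
        "family": family,
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        "cpu": "256",     # 0.25 vCPU expressed in CPU units
        "memory": "512",  # 0.5 GB expressed in MiB
        "taskRoleArn": task_role_arn,
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": container_name,
                "image": image_uri,
                "memoryReservation": 100,  # soft limit in MiB
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
                "essential": True,
            }
        ],
    }


# Placeholder ARNs and image URI; replace with the roles and repository you created.
definition = fargate_task_definition(
    "ecs-fargate-demo1-td",
    "ecs-fargate-demo-1-container",
    "123456789012.dkr.ecr.us-west-2.amazonaws.com/demo1:latest",
    "arn:aws:iam::123456789012:role/demo-task-role",
    "arn:aws:iam::123456789012:role/demo-task-execution-role",
)
print(json.dumps(definition, indent=2))
```

Keeping the definition in a file like this makes it easy to create the second task definition by changing only the family, container name and image.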

Create Service

- Select the "Task Definition" you created above.

- Select the action "Create Service".

- Fill in the form as below:

a. Launch type: Fargate

b. Service name: ecs-fragate-demo1-service

c. Number of tasks: 2

d. Minimum healthy percent: 50

e. Maximum percent: 100

- Click on "Next step".

- Fill in the form as below:

a. Cluster VPC: Select your VPC.

b. Subnets: Select private subnets (instances in these subnets can't be accessed directly from outside).

c. Security groups: Click edit and create a new security group

1. Security group name: "ecs-fargate-containter-sg"

2. Inbound rules for security group: Add a new custom rule for port 80 and add the Application Load Balancer security group we created above as the source.

3. Hit save to create the security group.

d. Auto-assign public IP: Disabled.

e. Load balancer type: "Application Load Balancer".

f. Load balancer name: ecs-fatgate-elb.

g. Click on "Add to load balancer".

1. Production listener port: Select the listener port we created above.

2. Target group name: ecs-fargate-demo1-tg

h. Uncheck "Enable service discovery integration".

- Click on "Next Step".

- Service Auto Scaling: Configure Service Auto Scaling to adjust your service's desired count.

a. Minimum number of tasks: 2

b. Desired number of tasks: 2

c. Maximum number of tasks: 4

d. IAM role for Service Auto Scaling: The ECS Auto Scale IAM role we created above

e. Scaling policy type: Step scaling

f. Execute policy when: Create new Alarm

g. Scale out

1. Alarm name: ECS_Fargate_Container_Scaleout_CPU_Utilisation

2. ECS service metric: CPUUtilization

3. Alarm threshold: Minimum of CPUUtilization > 70 for 1 consecutive period of 5 mins.

4. Scaling action: Add 1 task when 70 <= CPUUtilization

h. Scale in

1. Alarm name: ECS_Fargate_Container_Scalein_CPU_Utilisation

2. ECS service metric: CPUUtilization

3. Alarm threshold: Minimum of CPUUtilization < 50 for 1 consecutive period of 5 mins

4. Scaling action: Remove 1 task when 50 > CPUUtilization

- Click on Next Step and create the service.

Let's understand the above configurations.

- Minimum healthy percent and maximum percent:

a. These configure the number of tasks that should be present at any given time.

b. During deployment, ECS will first bring down tasks as far as the "Minimum healthy percent" allows and start new tasks with the new task definition version. Once the "Target group" health check passes, it will bring down the rest of the containers and start new ones, within the "Maximum percent" limit. This way your service will always be available.

- Security groups: We created a security group for the tasks and allowed only the Application Load Balancer to communicate with them.

- Auto-assign public IP: By disabling this, we made sure that our containers are not accessible from outside the network.

- Service Auto Scaling: If the CPU utilisation of the container is more than 70% for 5 continuous minutes, it will add one more task. If the CPU utilisation of the container is less than 50% for 5 continuous minutes, it will remove one task. But the minimum and maximum number of tasks will always be maintained.
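The scaling behaviour described above can be illustrated with a small simulation. This is only a sketch of the decision logic using the thresholds and task limits from this article, not how ECS implements it internally; the function name is made up:

```python
def next_task_count(current: int, cpu_percent: float,
                    minimum: int = 2, maximum: int = 4,
                    scale_out_at: float = 70.0, scale_in_below: float = 50.0) -> int:
    """Return the task count after one 5-minute evaluation period."""
    if cpu_percent >= scale_out_at:
        desired = current + 1   # scale out by one task
    elif cpu_percent < scale_in_below:
        desired = current - 1   # scale in by one task
    else:
        desired = current       # between the thresholds: no change
    # The configured minimum and maximum are always respected.
    return max(minimum, min(maximum, desired))


print(next_task_count(2, 85.0))  # busy service gains a task
print(next_task_count(4, 90.0))  # already at the maximum, stays put
print(next_task_count(2, 30.0))  # already at the minimum, stays put
```

Leaving a gap between the scale-in and scale-out thresholds (50 and 70 here) helps avoid tasks being added and removed in quick succession.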

You can use the same configuration for creating the other Service, and configure auto scaling with the existing alarms we created above.

This way we have deployed our services in the ECS cluster. But inter-service communication is still not complete, as we have not defined the paths in the Application Load Balancer (ALB).

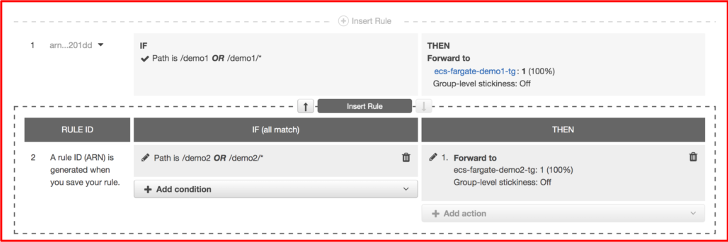

Setup inter service communication with ALB

With the previous steps, we have made sure that each task registers itself with the ALB when it is created. But we have not yet set up the routing of API calls to services based on paths, like below:

If one service makes a call like "http://<ALB NAME>/demo1", it should reach the demo1 service, and if the call is like "http://<ALB NAME>/demo2", it should reach the demo2 service.

- Go to the EC2 console and click on "Load Balancers".

- Select your created ALB and go to the "Listeners" tab.

- Click on "View/edit rules".

- Click on the "+" sign and insert a rule as below.

a. Add Condition: Path

1. is /demo1

2. or /demo1/*

- Click ok.

- Add Action as below

a. Forward to

b. Select Target Group: ecs-fargate-demo1-tg

- Click ok.

- Add another rule for the "demo2" service path, configure its target group "ecs-fargate-demo2-tg" in the action, and save the rules.
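To see what these two rules do to an incoming request, here is a small local simulation of path-based routing. It is only an approximation for illustration (it uses Python's `fnmatchcase` for the `*` wildcard and is not the ALB's own matcher), with the target group names created earlier in this article:

```python
from fnmatch import fnmatchcase
from typing import Optional

# Each rule: (path patterns, target group the request is forwarded to).
RULES = [
    (("/demo1", "/demo1/*"), "ecs-fargate-demo1-tg"),
    (("/demo2", "/demo2/*"), "ecs-fargate-demo2-tg"),
]


def target_group_for(path: str) -> Optional[str]:
    """Return the first target group whose patterns match the path, or None for the default action."""
    for patterns, target_group in RULES:
        if any(fnmatchcase(path, pattern) for pattern in patterns):
            return target_group
    return None


print(target_group_for("/demo1"))
print(target_group_for("/demo2/subjects/7"))
print(target_group_for("/unknown"))
```

Both patterns are needed per service: "/demo1" matches the bare path, while "/demo1/*" matches everything underneath it.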

Expose API endpoints via API gateway

AWS API Gateway is a managed service for creating and publishing APIs with security and at scale. API Gateway is capable of handling hundreds of thousands of concurrent requests.

We will expose our services as a REST API via API Gateway. Our services are hosted inside the VPC and can't currently be accessed via the internet. We need to use API Gateway private integration to expose our private endpoints via API Gateway.

Create Network Load Balancer

- Log in to the EC2 console in the same region where you created the VPC.

- Select "Load Balancers" from the left menu.

- Click on the "Create Load Balancer" button.

- Fill in the details as below

i. Name: "ecs-fargate-nlb"

ii. Scheme: internal

iii. Load Balancer Protocol: TCP: 80

iv. VPC: Select your VPC.

v. Availability Zones: Select all the available zones

vi. Subnets: Select all the public subnets we have created

- Click "Next: Configure Security Settings".

- Click "Next: Configure Routing".

- Create the target group as below

i. Name: ecs-fargate-nlb-group

ii. Target type: IP

iii. Protocol: TCP

iv. Port: 80 (the ALB is listening on this port).

- Click "Next: Register Targets".

- We will add the ALB IP addresses later.

- Click "Next: Review" and hit create to create the NLB (network load balancer).

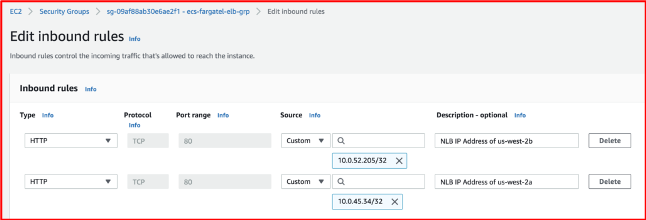

Setup the Integration between NLB and ALB

API Gateway will send the traffic to the NLB, and the NLB will route the traffic to the ALB. We need to set up this communication. To do so, we need to find the IP addresses of both the ALB and the NLB. As both are added in multiple subnets, both will have multiple IP addresses.

- Add the ALB IP addresses to the NLB target group we created above, named "ecs-fargate-nlb-group".

- Add the IP addresses of the NLB to the security group of the ALB we created above, as an inbound rule on port 80.
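If you script these two steps instead of using the console, the inputs are just lists of private IPs. The sketch below only prepares the data: the target entries follow the `Id`/`Port` shape used when registering IP targets with a target group, and the CIDR strings are what an inbound rule expects. The helper names and IP addresses are made up for illustration:

```python
import ipaddress
from typing import Dict, List, Union


def nlb_targets(alb_ips: List[str], port: int = 80) -> List[Dict[str, Union[str, int]]]:
    """Turn ALB private IPs into target entries for the NLB target group."""
    targets = []
    for ip in alb_ips:
        ipaddress.ip_address(ip)  # raises ValueError on a malformed address
        targets.append({"Id": ip, "Port": port})
    return targets


def ingress_cidrs(nlb_ips: List[str]) -> List[str]:
    """Turn NLB private IPs into single-host CIDR blocks for the ALB security group."""
    return [f"{ipaddress.ip_address(ip)}/32" for ip in nlb_ips]


# Hypothetical private IPs, one per subnet.
print(nlb_targets(["10.0.1.15", "10.0.2.27"]))
print(ingress_cidrs(["10.0.1.40", "10.0.2.41"]))
```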

Create VPC Links

We need to create "VPC Links" in API Gateway to send traffic to the NLB created above.

- Log in to the API Gateway console.

- Click on the "VPC Links" option in the left menu.

- Click create and fill in the required details as below:

a. Select "VPC link for REST APIs" and click create.

b. Name: ecs-fargate-vpc-link

c. Target NLB: Select the NLB created above.

d. Click create to create the VPC link

Create Rest API

- Login to API Gateway console.

- Select "APIs" from left bill of fare.

- Click on "Build" for "Rest API".

- Select "Rest" and "New API".

- Give a name like "ecs-fargate-api".

- Endpoint Type: Regional

- Click create.

- Click on "Actions" and select "Create Resource".

- Requite the name as "pupil" and click on "Create Resource".

- Select the resource and Click on Deportment.

- Click on "Create Method".

- Select "POST" from driblet down and click "ok" next to it

a. Select "Integration Type" equally "VPC Link"

b. Select "Use Proxy Integration"

c. Method: Postal service

d. VPC Link: Select the "VPC Link" we have created above.

e. Endpoint URL: http://<DNS Proper noun of ALB>/educatee/

f. Hit Save - Select the resource "student" and Click on Actions.

- Click on "Create Resources".

a. Resource Proper name: {student_id}

b. Resources Path: {student_id}

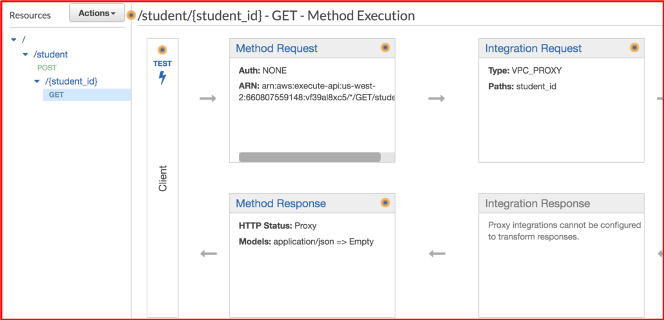

c. Striking "Create Resource". - Select the resource "{student_id}" and Click on Actions.

- Click on "Create Method".

- Select "Go" from drop down and click "ok" side by side to it.

a. Select "Integration Type" as "VPC Link"

b. Select "Use Proxy Integration"

c. Method: Get

d. VPC Link: Select the "VPC Link" we have created above.

eastward. Endpoint URL: http://<DNS Name of ALB>/pupil/{student_id}

f. Striking Save

Allow'south Deploy the API and test the API

- Select Deportment and select "Deploy API"

- Deployment stage: [New Phase]

- Stage proper name: Test

- Hit Deploy to deploy the API.

Copy the invoke URL and test in postman.
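The invoke URL of a regional REST API is made up of the API ID, the region and the stage name, followed by the resource path. A small sketch of composing the URL for the "student" resources defined above (the API ID is a placeholder, and the helper name is made up):

```python
def invoke_url(api_id: str, region: str, stage: str, resource_path: str = "") -> str:
    """Compose the invoke URL of a deployed stage, optionally with a resource path appended."""
    base = f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}"
    if resource_path:
        return f"{base}/{resource_path.lstrip('/')}"
    return base


# Placeholder API ID; copy the real one from the stage's invoke URL.
print(invoke_url("abc123defg", "us-west-2", "Test", "/student"))     # POST target
print(invoke_url("abc123defg", "us-west-2", "Test", "/student/42"))  # GET target
```

These are the URLs to paste into Postman: a POST to the first one and a GET to the second.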

There is a lot we can do with API Gateway. A few options are listed below:

- Add an authorizer to authorize API requests.

- You can check your headers and request body to validate the request.

- You can update the response in case of errors.

- Add a custom name for the API.

- Create multiple versions of the API.

- Add a firewall to restrict requests based on different conditions.

- Add throttling of APIs based on the client applications.

- Add logging and monitoring to get insights into the APIs.


Source: https://medium.com/adobetech/deploy-microservices-using-aws-ecs-fargate-and-api-gateway-1b5e71129338
